Rebuilding AKS Clusters

Rebuilding a cluster

Before rebuilding a cluster, remove it from the Application Gateway if the environment has a 00 and 01 setup, then delete the cluster manually in the Azure portal. If a lock is applied to the resource group, you will have to remove it first.
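If you prefer the CLI to the portal for the lock step, the following is a minimal sketch, assuming the Azure CLI is already logged in to the right subscription. The resource group name is a placeholder borrowed from the preview example further down this page, the function names are hypothetical, and the lock name has to be taken from the list output.

```shell
#!/usr/bin/env bash
# Sketch only: list and delete management locks on a cluster's resource group.
# RESOURCE_GROUP is an assumed placeholder; substitute the real group.
RESOURCE_GROUP="${RESOURCE_GROUP:-cft-preview-00-rg}"

# Show any locks currently applied to the resource group ($1).
list_locks() {
  az lock list --resource-group "$1" --query "[].{name:name, level:level}" -o table
}

# Delete a single lock by name ($2) from the resource group ($1).
delete_lock() {
  az lock delete --name "$2" --resource-group "$1"
}

# Usage:
#   list_locks "$RESOURCE_GROUP"
#   delete_lock "$RESOURCE_GROUP" <lock-name-from-list-output>
```

Listing first keeps the delete explicit: nothing is removed until you pass a lock name yourself.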

To rebuild the cluster, run the pipeline for the environment and the cluster you deleted:

Click on Run pipeline (blue button) in the top right of Azure DevOps. Ensure that Action is set to Apply, that Cluster is set to the one you want to build, and that the environment is selected from the drop-down menu.

Click on Run to start the pipeline.

Common Steps

Before deployment of a cluster

- Merge PR to remove a cluster from Application Gateway

After deployment of a cluster

- Add the cluster back into Application Gateway once you have confirmed that the deployment was successful. PR example here.

Environment Specific

Dev/Preview, AAT/Staging

- Change Jenkins to use the other cluster that is not going to be rebuilt

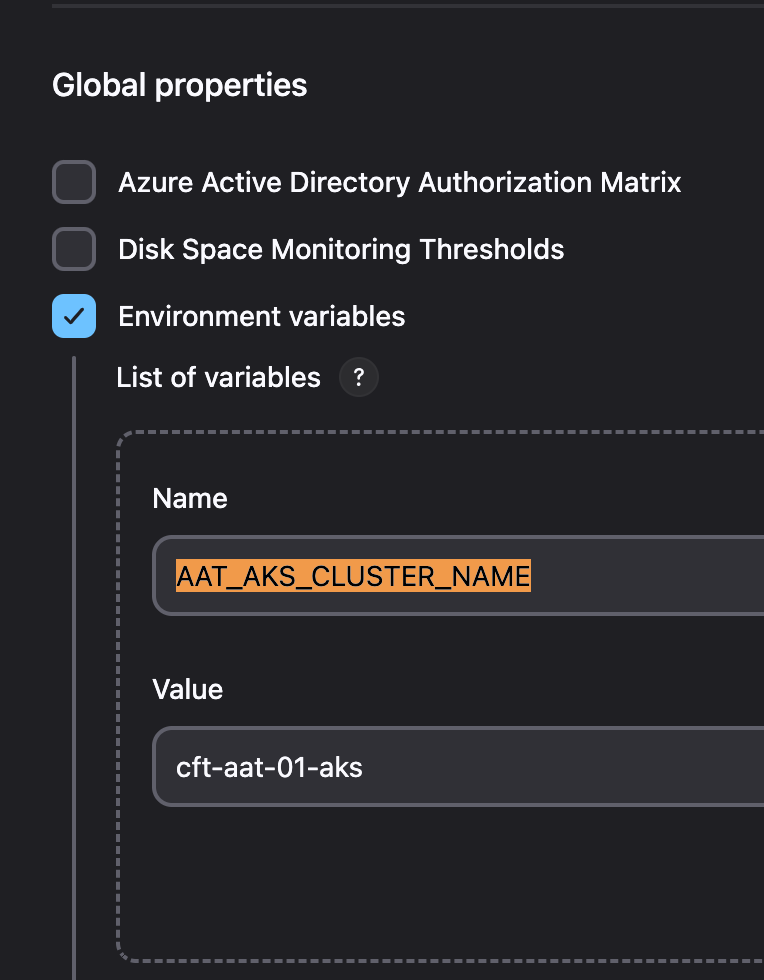

If the configuration doesn’t update after your pull request has been merged, you can change it manually in the Jenkins Configuration.

This configuration page contains the same environment variables as the PRs shown above.

After deployment of a cluster

- Change Jenkins to use the other cluster, e.g. cnp-flux-config#19886.

- After merging the above PR, if Jenkins is not updated (check here), you MUST manually change the Jenkins config to use the other cluster.

- If it is a preview environment, swap the external DNS active cluster (example PR).

- If it is an AAT Environment, you will need to update the Private DNS record to point to the new cluster

- There are 2 locations for this change:

- The private DNS entries should be set to the kubernetes internal loadbalancer IP (Traefik).

- See common upgrade steps for how to retrieve the IP address.

- Create a test PR; normally a README change to rpe-pdf-service is enough.

- Example PR. Check that the PR has successfully run through the AKS deploy - preview stage in Jenkins.

- Send out comms on the #cloud-native-announce Slack channel regarding the swap over to the new preview cluster. See the example announcement below:

Hi all, the Preview cluster has been swapped to cft-preview-00-aks.

You can log in using:

az aks get-credentials --resource-group cft-preview-00-rg --name cft-preview-00-aks --subscription DCD-CFTAPPS-DEV --overwrite-existing

- Delete all ingresses on the old cluster to ensure external-dns deletes its existing records:

kubectl delete ingress --all-namespaces -l '!helm.toolkit.fluxcd.io/name,app.kubernetes.io/managed-by=Helm'

- Delete any orphan A records that external-dns might have missed:

- Replace X.X.X.X with the loadbalancer IP (kubernetes-internal) of the cluster you want to clean up.

# Private DNS

az network private-dns record-set a list --zone-name service.core-compute-preview.internal -g core-infra-intsvc-rg --subscription DTS-CFTPTL-INTSVC --query "[?aRecords[0].ipv4Address=='X.X.X.X'].[name]" -o tsv | xargs -I {} -n 1 -P 8 az network private-dns record-set a delete --zone-name service.core-compute-preview.internal -g core-infra-intsvc-rg --subscription DTS-CFTPTL-INTSVC --yes --name {}

az network private-dns record-set a list --zone-name preview.platform.hmcts.net -g core-infra-intsvc-rg --subscription DTS-CFTPTL-INTSVC --query "[?aRecords[0].ipv4Address=='X.X.X.X'].[name]" -o tsv | xargs -I {} -n 1 -P 8 az network private-dns record-set a delete --zone-name preview.platform.hmcts.net -g core-infra-intsvc-rg --subscription DTS-CFTPTL-INTSVC --yes --name {}

- Delete any TXT records whose value contains 'inactive':

# Private DNS

az network private-dns record-set txt list --zone-name preview.platform.hmcts.net -g core-infra-intsvc-rg --subscription DTS-CFTPTL-INTSVC --query "[?contains(txtRecords[0].value[0], 'inactive')].[name]" -o tsv | xargs -I {} -n 1 -P 8 az network private-dns record-set txt delete --zone-name preview.platform.hmcts.net -g core-infra-intsvc-rg --subscription DTS-CFTPTL-INTSVC --yes --name {}

# Public DNS

az network dns record-set txt list --zone-name preview.platform.hmcts.net -g reformmgmtrg --subscription Reform-CFT-Mgmt --query "[?contains(txtRecords[0].value[0], 'inactive')].[name]" -o tsv | xargs -I {} -n 1 -P 8 az network dns record-set txt delete --zone-name preview.platform.hmcts.net -g reformmgmtrg --subscription Reform-CFT-Mgmt --yes --name {}
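Because the cleanup commands above delete records without prompting, it can help to preview what the A record query matches first. The sketch below reuses the zone names, resource group, subscription and query from those commands and only lists the matching records. The function names are hypothetical helpers, not part of any existing tooling.

```shell
#!/usr/bin/env bash
# Sketch only: preview A records that point at a given load balancer IP
# before running the delete commands. Function names are hypothetical.

# Build the JMESPath query used by the cleanup commands for a given IP ($1).
a_record_query() {
  printf "[?aRecords[0].ipv4Address=='%s'].[name]" "$1"
}

# List (without deleting) matching A records in a private DNS zone.
# $1 = load balancer IP, $2 = private DNS zone name
preview_orphan_a_records() {
  az network private-dns record-set a list \
    --zone-name "$2" \
    -g core-infra-intsvc-rg \
    --subscription DTS-CFTPTL-INTSVC \
    --query "$(a_record_query "$1")" \
    -o tsv
}

# Usage (replace X.X.X.X with the kubernetes-internal load balancer IP):
#   preview_orphan_a_records X.X.X.X service.core-compute-preview.internal
#   preview_orphan_a_records X.X.X.X preview.platform.hmcts.net
```

If the list contains anything unexpected, check the IP before running the delete variant.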

Once the swap over is fully complete, you can delete the old cluster.

- Comment out the inactive preview cluster.

- Example PR.

- Once the PR has been approved and merged, run this pipeline.

Perftest

Before deployment of a cluster

- Confirm with Nickin Sitaram that the environment is not being used before starting.

- Use the Slack channel pertest-cluster for communication.

- Scale up the number of active nodes: increase by 5 nodes if removing a cluster. This is needed because CCD and IDAM auto-scale their number of running pods when a cluster is taken out of service for an upgrade.

After deployment of a cluster

- Check that all pods are deployed and running. Compare against the pod status reference taken before deployment.
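One way to take the pod status reference is to save a per-namespace summary before the rebuild and diff it afterwards. This is a minimal sketch, assuming kubectl is pointed at the relevant cluster. The function names and file paths are hypothetical, and counting by namespace and phase is a choice made here because pod names change across a rebuild.

```shell
#!/usr/bin/env bash
# Sketch only: capture and compare pod status summaries around a rebuild.

# Reduce "namespace phase" lines on stdin to "namespace phase count" lines.
summarise_pods() {
  awk '{print $1, $2}' | sort | uniq -c | awk '{print $2, $3, $1}'
}

# Write a summary of pod phases per namespace to the file given as $1.
snapshot_pods() {
  kubectl get pods --all-namespaces --no-headers \
    -o custom-columns=NS:.metadata.namespace,PHASE:.status.phase \
    | summarise_pods > "$1"
}

# Show differences between two snapshots; no output means they match.
compare_snapshots() {
  diff "$1" "$2"
}

# Usage:
#   snapshot_pods /tmp/pods-before.txt   # before removing the cluster
#   snapshot_pods /tmp/pods-after.txt    # after the rebuild
#   compare_snapshots /tmp/pods-before.txt /tmp/pods-after.txt
```

Note that .status.phase is coarser than the STATUS column of kubectl get pods: a pod in CrashLoopBackOff still reports phase Running, so treat this as a first-pass check only.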

Known issues

Neuvector

Alerts such as admission webhook "neuvector-validating-admission-webhook.neuvector.svc" denied the request: can be seen on the aks-neuvector-<env> Slack channels. This happens when Neuvector is broken.

- Check events and status of neuvector helm release.

- Delete Neuvector Helm release to see if it comes back fine.

- Neuvector fails to install.

- Check whether all enforcers come up in time; they can fail to come up if the nodes are full.

- If they keep failing with race conditions, it could be due to backups being corrupt.

- Usually policy.backup and admission_control.backup are the ones you need to delete from the Azure file share if they are corrupt.
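The checks above could be run along these lines. This is a sketch under assumptions: the namespace neuvector is inferred from the webhook service name, the Helm release is assumed to be a Flux HelmRelease (as the helm.toolkit.fluxcd.io label used earlier on this page suggests), and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: inspect the Neuvector release. The namespace is inferred from
# the webhook service name; the HelmRelease name is NOT known here and has to
# be read from the show_releases output.
NAMESPACE="${NAMESPACE:-neuvector}"

# List Flux HelmReleases in the namespace ($1) with their status.
show_releases() {
  kubectl get helmreleases.helm.toolkit.fluxcd.io -n "$1"
}

# Show events in the namespace ($1), oldest first.
show_events() {
  kubectl get events -n "$1" --sort-by=.lastTimestamp
}

# Show pods (including enforcers) and the nodes they are scheduled on.
show_pods() {
  kubectl get pods -n "$1" -o wide
}

# Usage:
#   show_releases "$NAMESPACE"
#   show_events "$NAMESPACE"
#   show_pods "$NAMESPACE"
```

The sketch stops at inspection on purpose: deleting the release needs its actual name, which is not given on this page.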

Dynatrace oneagent pods not deployed or failing to start

NOTE: Whether the issue described here still applies needs to be validated once the updated Dynatrace Operator is rolled out.

On a rebuilt or newly deployed cluster, Dynatrace oneagent pods are either not deployed by Flux or, where deployed, fail with a CrashLoopBackOff status.

The Dynatrace Helm chart requires CRDs to be applied before the chart is installed. The CRDs currently need to be applied manually as they are not part of the existing Flux config.

Run the command below on the cluster. An empty result confirms that the CRDs are not installed.

kubectl get crds | grep oneagent

To fix, run the commands below to apply the CRDs to the cluster:

kubectl apply -f https://github.com/Dynatrace/dynatrace-oneagent-operator/releases/latest/download/dynatrace.com_oneagents.yaml

kubectl apply -f https://github.com/Dynatrace/dynatrace-oneagent-operator/releases/latest/download/dynatrace.com_oneagentapms.yaml
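The check and the fix can be combined so that the CRDs are only applied when they are missing. A minimal sketch using the same commands and URLs as above; the helper function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: apply the oneagent CRDs only when none are installed.
BASE_URL="https://github.com/Dynatrace/dynatrace-oneagent-operator/releases/latest/download"

# Succeeds when the CRD listing on stdin contains a oneagent CRD.
has_oneagent_crds() {
  grep -q oneagent
}

# Apply both CRD manifests unless a oneagent CRD is already present.
ensure_oneagent_crds() {
  if kubectl get crds | has_oneagent_crds; then
    echo "oneagent CRDs already installed"
  else
    kubectl apply -f "$BASE_URL/dynatrace.com_oneagents.yaml"
    kubectl apply -f "$BASE_URL/dynatrace.com_oneagentapms.yaml"
  fi
}

# Usage:
#   ensure_oneagent_crds
```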