Dynatrace Patching Example

Context

We use the dynatrace-operator Helm chart to deploy our instances of Dynatrace. It supports the rollout and lifecycle management of both ActiveGate and OneAgent in Kubernetes.

Before patching, check the latest available version. Read through the release notes for every version between the current one and the latest to see if there are any breaking changes or prerequisites for the upgrade.
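
As a rough sketch, the current and available chart versions can be compared from the command line. This assumes the `dynatrace` Helm repo alias (the one used in the troubleshooting commands later on) is already configured locally. The `latest_version` helper is purely illustrative and relies on GNU `sort -V`.

```shell
# Illustrative helper: read version strings on stdin and print the highest one.
# Relies on `sort -V` (version sort), available in GNU coreutils.
latest_version() {
  sort -V | tail -n 1
}

# Show the releases (and chart versions) currently installed in the namespace.
show_current() {
  helm ls -n dynatrace
}

# List the chart versions published in the repo.
# Assumes a repo alias called "dynatrace" has already been added.
show_available() {
  helm repo update
  helm search repo dynatrace/dynatrace-operator --versions
}

# Example: printf '0.12.0\n0.15.0\n0.13.1\n' | latest_version
```

Run `show_current` and `show_available` side by side to work out which release notes you need to read.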

For example, when upgrading from 0.12 -> 0.15, there is a note in 0.13 about the required containerd version. To check which version your nodes are running, look at the CONTAINER-RUNTIME column in the output of:

kubectl get nodes -o wide
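
If there are a lot of nodes, a small script can flag any that are below the required version. This is only a sketch: the minimum version passed in is a placeholder that you would take from the release notes, the function names are made up for illustration, and it assumes GNU `sort -V` is available.

```shell
# Succeeds if a runtime string such as "containerd://1.6.21" is at or above
# the given minimum version.
runtime_meets_minimum() {
  local runtime="$1" minimum="$2"
  local version="${runtime#*://}"   # strip the "containerd://" prefix
  # With version sort, the minimum must come first (or be equal) to pass.
  [ "$(printf '%s\n%s\n' "$minimum" "$version" | sort -V | head -n 1)" = "$minimum" ]
}

# Print each node with its container runtime and whether it meets the minimum.
check_nodes() {
  local minimum="$1"
  kubectl get nodes --no-headers \
    -o custom-columns='NAME:.metadata.name,RUNTIME:.status.nodeInfo.containerRuntimeVersion' |
  while read -r name runtime; do
    if runtime_meets_minimum "$runtime" "$minimum"; then
      echo "OK    $name $runtime"
    else
      echo "BELOW $name $runtime"
    fi
  done
}

# Usage (placeholder minimum, check the release notes for the real one):
#   check_nodes "1.6.0"
```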

Patching process

Dynatrace is deployed through flux-config, so upgrading involves raising a PR against flux-config to change the version numbers.

In this case, as there were no breaking changes or config changes, I applied the change to base in SDS first, and then to CFT once everything in SDS had upgraded successfully. If there is anything you want to test in an upgrade, you can apply it to a single nonprod environment first using kustomize. There is a good example of applying CRDs to a specific environment in the traefik patching docs.

Monitoring the upgrade

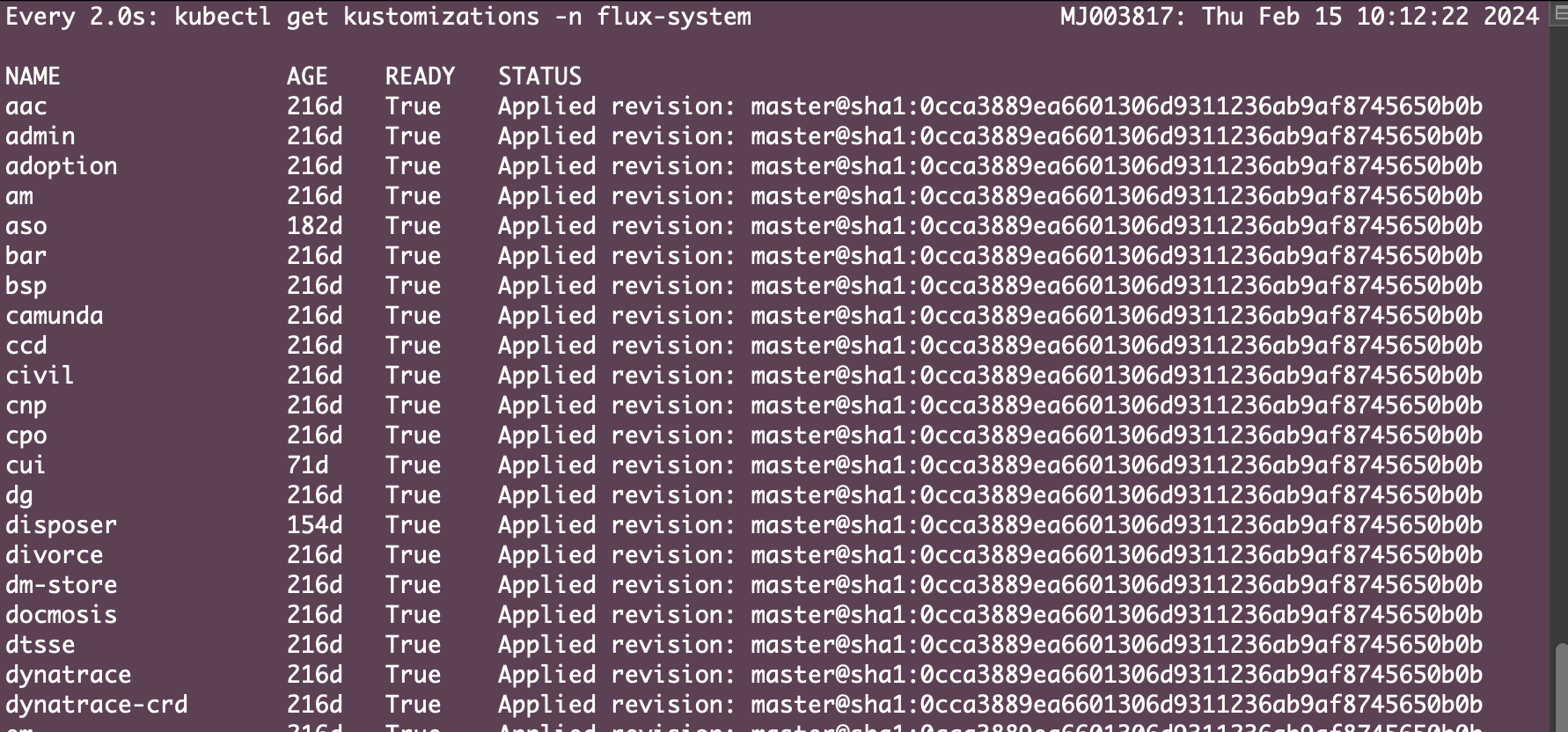

Once the PR to flux is merged, you can check if the cluster has picked it up yet by running:

kubectl get kustomizations -n flux-system

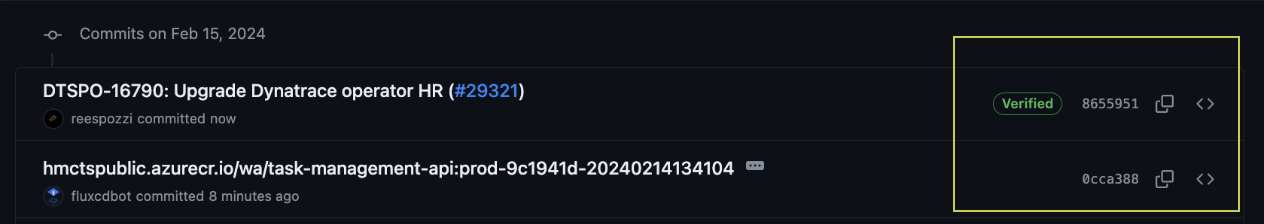

You can compare the hash shown here with your flux-config commit to see if it has been picked up yet.

In this example, my revision is yet to be applied. Wait for it to be applied before you start checking the deployment more closely.
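
Rather than reading the hash by eye, you can pull out the applied revision and compare it with your commit. A minimal sketch, assuming the Kustomization is called `dynatrace` (the name used in the `flux reconcile` command later on) and that the commit SHA you pass in is your merged flux-config commit. The function names are illustrative only.

```shell
# Print the revision that the "dynatrace" Kustomization last applied.
applied_revision() {
  kubectl get kustomization dynatrace -n flux-system \
    -o jsonpath='{.status.lastAppliedRevision}'
}

# Succeeds if the applied revision contains the given commit SHA
# (a full SHA or a short prefix both work, since this is a substring match).
revision_has_commit() {
  local revision="$1" sha="$2"
  [ -n "$sha" ] || return 1   # an empty SHA would match anything
  case "$revision" in
    *"$sha"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage (COMMIT_SHA is a placeholder for your merged commit):
#   revision_has_commit "$(applied_revision)" "$COMMIT_SHA" && echo "picked up"
```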

Once the revision is applied, Flux will try to upgrade the HelmRelease (HR) to pick up the latest version. You can see this by running:

kubectl get hr -n dynatrace
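
If the table output does not tell you enough, the status conditions on the HR usually carry the reason. The sketch below prints them one per line. The HelmRelease name is an assumption here (defaulted to `dynatrace-operator`), so confirm it from the `kubectl get hr` output first.

```shell
# Print each status condition of the HelmRelease as "Type=Status message".
# The default name is an assumption; pass the real HR name as the first argument.
hr_conditions() {
  local name="${1:-dynatrace-operator}"
  kubectl get helmrelease "$name" -n dynatrace \
    -o jsonpath='{range .status.conditions[*]}{.type}={.status} {.message}{"\n"}{end}'
}

# Succeeds if the condition lines read on stdin include Ready=True.
hr_is_ready() {
  grep -q '^Ready=True'
}

# Usage:
#   hr_conditions | hr_is_ready && echo "HR is ready"
```

Bear in mind the HR may still show Ready from the previous version until Flux starts the upgrade, so check the message as well as the status.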

Whilst this is happening, I like to have a few windows open running the following commands to see how things are going. This follows the principle of failing fast: you can see quickly if something is not working and revert your changes if needed.

Checking pods are being deployed healthily on the new version:

watch kubectl get pods -n dynatrace
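
When there are many pods, it can be easier to list only the ones that are not healthy. A small sketch that filters the standard `kubectl get pods` columns (NAME, READY, STATUS); the function names are illustrative.

```shell
# Read `kubectl get pods --no-headers` output on stdin and print the name and
# status of every pod that is not fully ready and running.
unhealthy_pods() {
  awk '
    $3 == "Completed" { next }      # finished pods are not a problem
    {
      split($2, ready, "/")         # READY looks like "1/1"
      if (ready[1] != ready[2] || $3 != "Running") print $1, $3
    }'
}

# List unhealthy pods in the dynatrace namespace.
check_pods() {
  kubectl get pods -n dynatrace --no-headers | unhealthy_pods
}

# Usage:
#   watch kubectl get pods -n dynatrace     # in one window
#   check_pods                              # in another, for a quick summary
```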

Checking events in the namespace in case of failures or errors:

kubectl get events --sort-by=".lastTimestamp" -n dynatrace
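
The event list can get noisy during a rollout. As a sketch, the same command can be narrowed to warnings only using a field selector; the function name is just for illustration.

```shell
# Show only Warning events in the dynatrace namespace, oldest first.
warning_events() {
  kubectl get events -n dynatrace \
    --field-selector type=Warning \
    --sort-by=.lastTimestamp
}

# Usage:
#   warning_events | tail -n 20
```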

Usually, Helm will show whether the deployment was successful before kubectl reports it. If all goes well, you can confirm the deployment succeeded using the following commands:

kubectl get hr -n dynatrace

helm ls -a -n dynatrace
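
If you want a yes/no answer rather than a table, Helm's list filters can be used. A minimal sketch, assuming the release is called `dynatrace-operator` as in the Helm commands elsewhere in this document. It only checks the release state, not the chart version, so still check the CHART column in `helm ls`.

```shell
# Succeeds if the named release is in the "deployed" state.
# --deployed limits the list to deployed releases; -q prints names only.
release_is_deployed() {
  local release="${1:-dynatrace-operator}"
  [ -n "$(helm ls -n dynatrace --deployed --filter "^${release}\$" -q)" ]
}

# Usage:
#   release_is_deployed && echo "deployed" || echo "not deployed yet"
```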

Troubleshooting

After patching to version 1.1.0 there was an issue where the CRDs would create other resources. Dynatrace has fixed this, but when the HR tried to deploy it would still complain about existing resources. If you see this, you can remove the HR and wait for it to come back. If it fails, go straight to removing the CRDs shown in the commands below.

If the Helm release fails to install, you can check the kustomize pod logs and grep for dynatrace. You might find, for example, that it cannot find the webhook. The following commands have been used to work around this.

First delete the CRDs, then use Helm to install the release, pointing it at the image in our repository to pull values, and then reconcile Flux.

kubectl delete crds dynakubes.dynatrace.com edgeconnects.dynatrace.com

helm upgrade dynatrace-operator dynatrace/dynatrace-operator --install --version 1.5.1 --set imageRef.repository=hmctspublic.azurecr.io/imported/dynatrace/dynatrace-operator

flux reconcile kustomization dynatrace -n flux-system
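
Before resorting to the workaround above, it is worth confirming the error in the controller logs. The sketch below assumes a standard Flux install, where the kustomize pod runs as the `kustomize-controller` deployment in `flux-system`. You can pass `helm-controller` instead to see the Helm side. The function name is illustrative.

```shell
# Print recent controller log lines that mention dynatrace.
# First argument: controller deployment name (defaults to kustomize-controller).
reconcile_log_lines() {
  local controller="${1:-kustomize-controller}"
  kubectl logs -n flux-system "deploy/$controller" --since=1h |
    grep -i dynatrace
}

# Usage:
#   reconcile_log_lines
#   reconcile_log_lines helm-controller
```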

If the upgrade is failing, you will see an error when the pods are starting up, or in the events of the namespace. You should revert your upgrade PR and look into those logs to see what other changes may be needed.

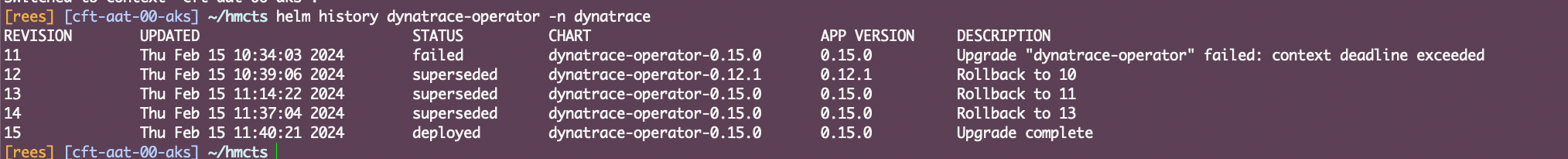

If there are no errors in the pods or events, but you find that the helm upgrade is just timing out, you can take the below approach to reconcile things. You can see attempted revisions/upgrades using helm history:

helm history dynatrace-operator -n dynatrace

In the example, you can see that a timeout occurred upgrading from 0.12 to 0.15:

To try this again, you can run the command below to roll back to the revision that attempted to deploy the upgraded version, in this case revision 11.

helm rollback dynatrace-operator 11 -n dynatrace

You may need to repeat this a few times if you keep seeing a context deadline exceeded error.
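
Repeating the rollback by hand gets tedious, so here is a sketch of a bounded retry loop around the same command. The attempt count and pause are arbitrary defaults picked for illustration, not values we have tuned.

```shell
# Retry `helm rollback` a limited number of times, pausing between attempts.
# Arguments: release, revision, attempts (default 3), pause in seconds (default 30).
retry_rollback() {
  local release="$1" revision="$2" attempts="${3:-3}" pause="${4:-30}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    echo "Attempt $i of $attempts: rolling $release to revision $revision"
    if helm rollback "$release" "$revision" -n dynatrace; then
      return 0
    fi
    i=$((i + 1))
    # Only pause if another attempt remains.
    if [ "$i" -le "$attempts" ]; then
      sleep "$pause"
    fi
  done
  echo "Giving up after $attempts attempts" >&2
  return 1
}

# Usage:
#   retry_rollback dynatrace-operator 11
```

If it still fails after a few attempts, stop and look at the pods and events again rather than increasing the attempt count.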

Edge case

Occasionally, the release may get into a state where Helm shows the latest chart version as deployed, but kubectl shows the HR in a “False” state. This happens when the HR has hit its maximum retries, but you have manually used helm rollback to get the deployment into the correct state. You can use kubectl edit to increase the retries temporarily, and Flux should then reconcile so that kubectl shows the correct status. Flux will reset the retries back to the original value after a few minutes.
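
As a non-interactive alternative to kubectl edit, the same change can be made with kubectl patch. This is a sketch under assumptions: it assumes the retries in question live at `spec.upgrade.remediation.retries` on the HelmRelease, and the HR name and retry count are placeholders. Check the HR spec with `kubectl get hr -n dynatrace -o yaml` before using it.

```shell
# Build a JSON merge patch that sets the upgrade retry count.
# Assumes the field is spec.upgrade.remediation.retries; verify against your HR.
retries_patch() {
  printf '{"spec":{"upgrade":{"remediation":{"retries":%d}}}}' "$1"
}

# Apply the patch to the named HelmRelease in the dynatrace namespace.
bump_retries() {
  local name="$1" retries="$2"
  kubectl patch helmrelease "$name" -n dynatrace \
    --type merge -p "$(retries_patch "$retries")"
}

# Usage (HR name and retry count are placeholders):
#   bump_retries dynatrace-operator 5
```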