Data Boarding

Prerequisites

- Be onboarded to the HMCTS GitHub Organisation

- Get a member of your team to add you to azure-access or raise a platops-help ticket.

GitHub Overview, Repositories and Pull Requests

Overview

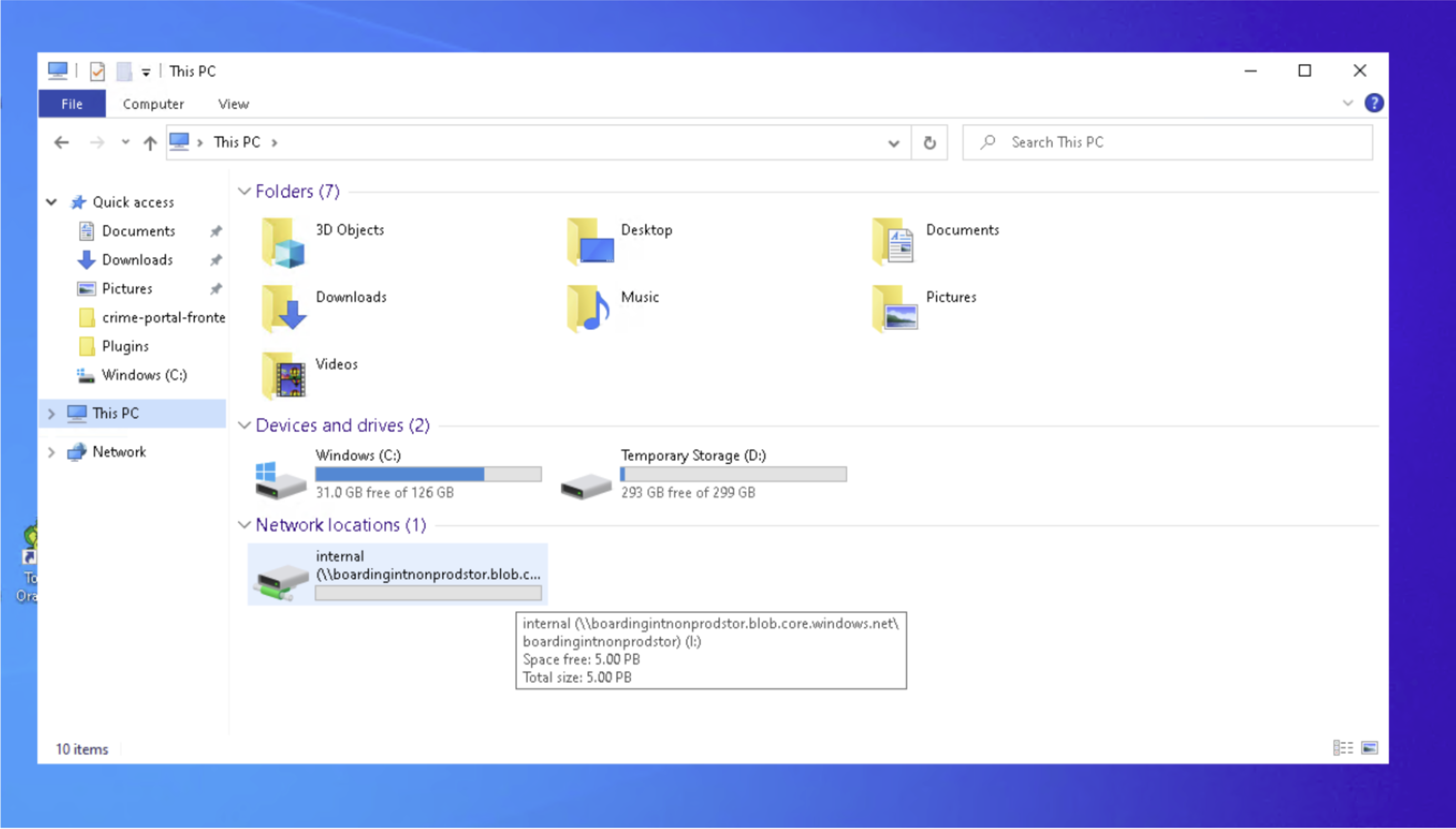

A total of four storage accounts have been deployed: an internal and an external account in each of the NonProd and Prod environments. The external account is directly accessible from RDWeb and is enabled for SFTP. The internal account is mounted as a network drive on the Jumpbox for that environment.

These accounts allow the transfer of data in and out of the HMCTS Azure environments with an audit trail.

Copying Data from RDWeb to the external storage accounts

Both the production and non-production external storage accounts can be accessed via SFTP from RDWeb. The hostnames to connect to are below:

- Nonprod: boardingextnonprodstor.blob.core.windows.net

- Prod: boardingextprodstor.blob.core.windows.net

Usernames and passwords have already been shared with CGI. If these have been lost, or this is a new application, please raise a platops-help ticket.

Upload files or folders as you would to any other SFTP server. Data will be retained for 30 days before it is automatically removed.
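As a small illustration of how the connection details fit together, the sketch below builds the `user@host` string to pass to an SFTP client. It assumes the username takes the form `<storage account>.<local user>`, as in the example later in this document. The function name `sftp_target` and the local user `myusername` are hypothetical.

```python
# Hostnames of the external storage accounts, as listed above.
EXTERNAL_HOSTS = {
    "nonprod": "boardingextnonprodstor.blob.core.windows.net",
    "prod": "boardingextprodstor.blob.core.windows.net",
}


def sftp_target(environment, local_user):
    """Return the user@host string for the external account in `environment`.

    Assumes the SFTP username is '<storage account>.<local user>'.
    """
    try:
        host = EXTERNAL_HOSTS[environment]
    except KeyError:
        raise ValueError(
            f"environment must be one of {sorted(EXTERNAL_HOSTS)}"
        ) from None
    # The storage account name is the first label of the hostname.
    storage_account = host.split(".", 1)[0]
    return f"{storage_account}.{local_user}@{host}"


if __name__ == "__main__":
    print(sftp_target("nonprod", "myusername"))
```

The resulting string can be given to a command-line client, for example `sftp <target>`, which will then prompt for the password.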

Copy data between internal/external storage accounts

To copy data between the external and internal storage accounts there is an automated, config-driven process. Configuration is via YAML files in the data-config folder of the hmcts/ops-jumpboxes GitHub repository.

An example configuration is below:

    boarding:
      - path: ProjectX/my-db-import.pgdump
        source:
          environment: prod
          account: external
        destination:
          environment: prod
          account: internal
        completed: false

The first line defines a list of boarding objects. It is always required.

    boarding:

The path property refers to the location of the source folder or file within the source storage account. This is where you uploaded or copied your file to.

    - path: ProjectX/my-db-import.pgdump

The source object defines which environment and account you want to transfer the file from. Valid values for environment are prod or nonprod. Valid values for account are external or internal.

    source:
      environment: prod
      account: external

The destination object defines which environment and account you want to transfer to. The format is the same as the source object.

    destination:
      environment: prod
      account: internal

The completed property is used by the automation to determine whether a given config file has been actioned or not. The automation will update config files with completed: true once it has successfully transferred the file/folder.

    completed: false
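The rules above can be checked locally before raising a pull request. The following is a minimal sketch, not the validation that the automation itself performs: it works on a config that has already been parsed into a Python dictionary (for example with a YAML library), and the function name `validate_boarding` is hypothetical. It also assumes that path, source and destination belong to each boarding object, and that completed, when present, must be a boolean.

```python
VALID_ENVIRONMENTS = {"prod", "nonprod"}
VALID_ACCOUNTS = {"external", "internal"}


def validate_boarding(config):
    """Return a list of problems found in a parsed config (empty if none)."""
    entries = config.get("boarding")
    if not isinstance(entries, list):
        return ["'boarding' must be a list of boarding objects"]

    errors = []
    for i, entry in enumerate(entries):
        if not isinstance(entry, dict):
            errors.append(f"boarding[{i}]: must be a mapping")
            continue

        path = entry.get("path")
        if not isinstance(path, str) or not path:
            errors.append(f"boarding[{i}].path: must be a non-empty string")

        # source and destination share the same format.
        for side in ("source", "destination"):
            obj = entry.get(side)
            if not isinstance(obj, dict):
                errors.append(f"boarding[{i}].{side}: must be a mapping")
                continue
            env = obj.get("environment")
            if not (isinstance(env, str) and env in VALID_ENVIRONMENTS):
                errors.append(
                    f"boarding[{i}].{side}.environment: must be prod or nonprod"
                )
            account = obj.get("account")
            if not (isinstance(account, str) and account in VALID_ACCOUNTS):
                errors.append(
                    f"boarding[{i}].{side}.account: must be external or internal"
                )

        # Assumption: completed is optional here, but must be a boolean if set.
        if "completed" in entry and not isinstance(entry["completed"], bool):
            errors.append(f"boarding[{i}].completed: must be true or false")

    return errors
```

An empty list means no problems were found. Each message names the offending property by its position in the boarding list, which makes it easy to locate in the YAML file.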

Copying data to/from internal storage accounts

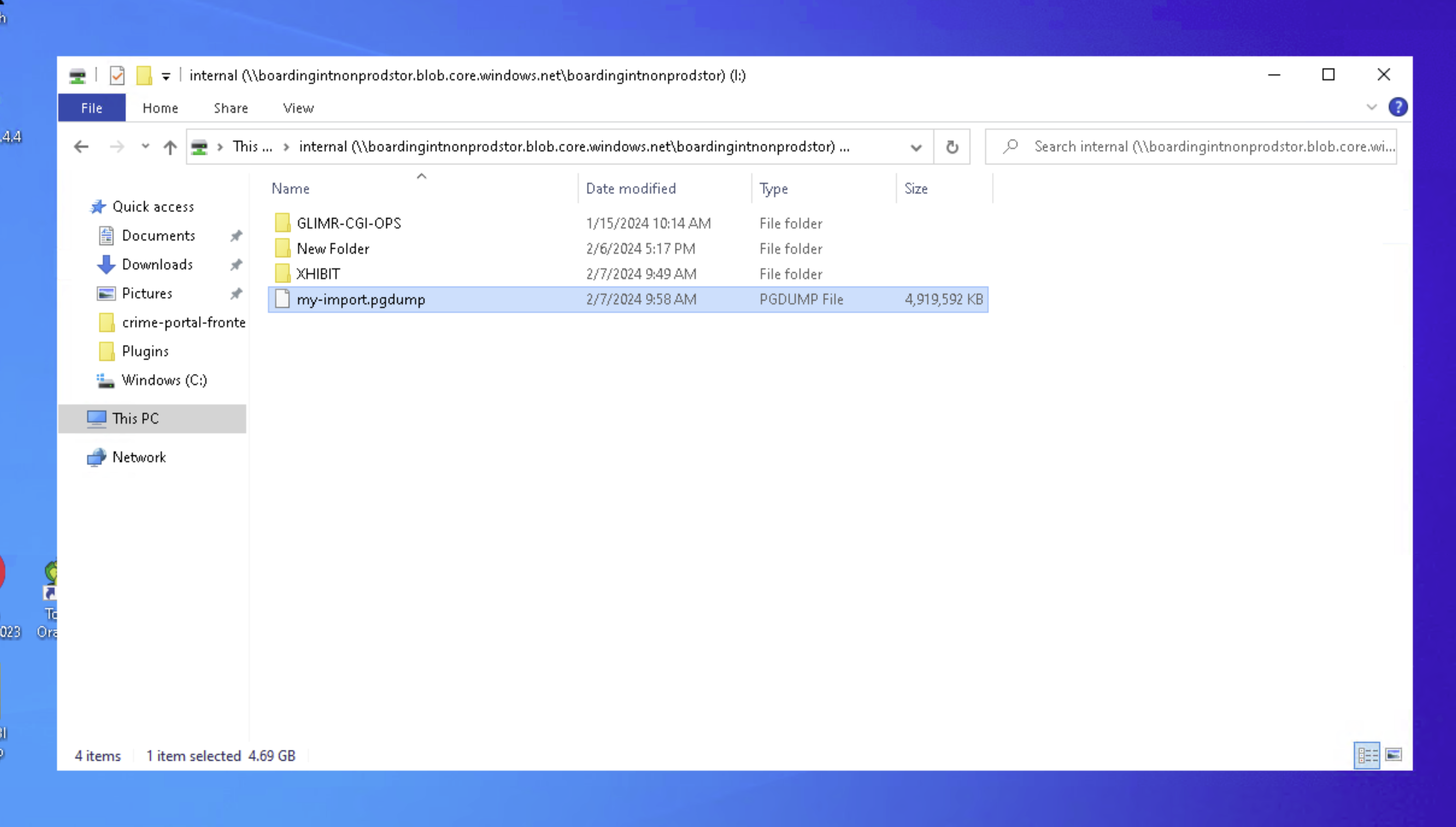

The internal storage account is mounted as a network drive on the Jumpbox for that environment. Data can be copied and pasted to this drive as if it were a local disk on the Jumpbox.

Examples

External to Internal

I need to copy a file, my-import.pgdump, from RDWeb through to the non-production Jumpbox, where I can then import it into an Azure PostgreSQL Flexible Server.

- SFTP to boardingextnonprodstor.myusername@boardingextnonprodstor.blob.core.windows.net

- Upload my-import.pgdump file

- Navigate to hmcts/ops-jumpboxes

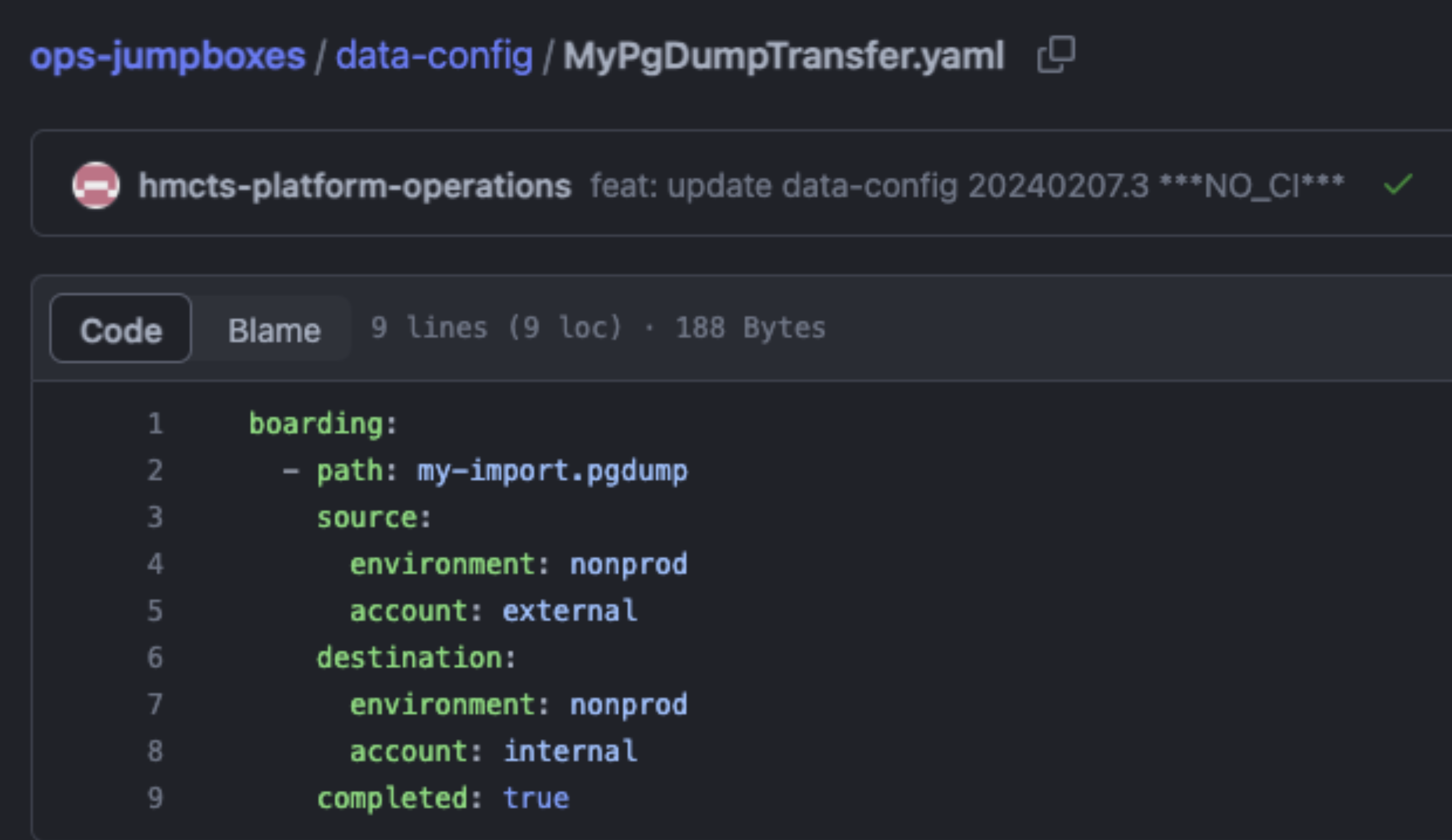

- Create a file in the data-config folder, for example MyPgDumpTransfer.yaml (the name of this file doesn't matter)

- Add the contents:

      boarding:
        - path: my-import.pgdump
          source:
            environment: nonprod
            account: external
          destination:
            environment: nonprod
            account: internal
          completed: false

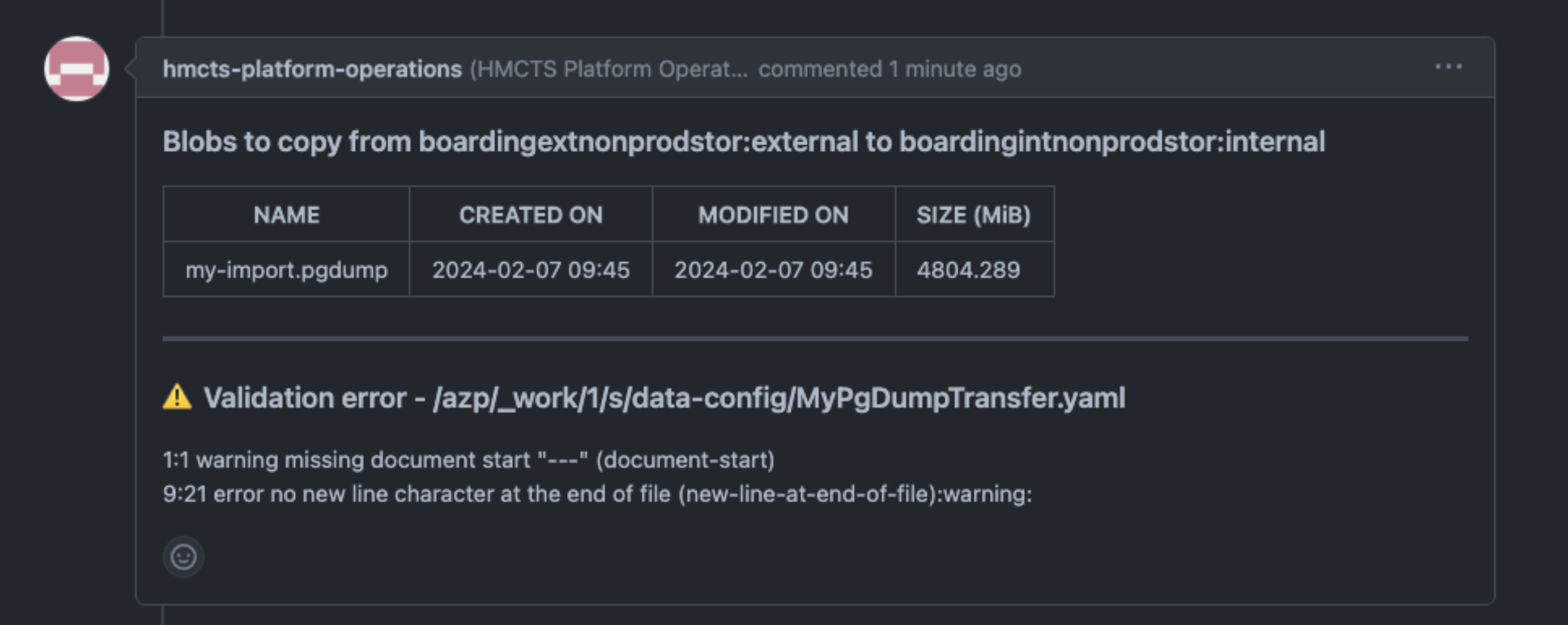

- Raise a pull request. This validates your configuration and reports what the automation will do. If your configuration file was not picked up or has validation errors, a comment with the details will be added to your pull request. If your config is valid, the comment will list the files to be transferred.

- Merge the pull request and wait for the automation to pick up the files. You can view your config file in the data-config folder and check that the completed flag has been set to true.

- Your file will then be present in the internal storage account.
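The config file from the steps above could also be generated rather than written by hand. The sketch below is one way to do that under stated assumptions: the function name `render_boarding_config` is hypothetical, the nesting of the properties under each list item is inferred from the property descriptions earlier in this document, and the path is assumed to be a plain value that needs no YAML quoting.

```python
def render_boarding_config(path, environment,
                           source_account="external",
                           destination_account="internal"):
    """Return YAML text for one transfer within a single environment.

    Assumption: path, source, destination and completed are all
    properties of the same boarding list item.
    """
    return (
        "boarding:\n"
        f"  - path: {path}\n"
        "    source:\n"
        f"      environment: {environment}\n"
        f"      account: {source_account}\n"
        "    destination:\n"
        f"      environment: {environment}\n"
        f"      account: {destination_account}\n"
        # New configs start as not completed; the automation flips this flag.
        "    completed: false\n"
    )


if __name__ == "__main__":
    # Print the config for the External to Internal example.
    print(render_boarding_config("my-import.pgdump", "nonprod"), end="")
```

The output can be saved as a .yaml file in the data-config folder and committed as part of the pull request.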