Troubleshooting issues

Table of Contents

- GitHub

- Receiving an email regarding inactive account

- Jenkins

- Debug Application Startup issues in AKS

- F5 VPN not connecting or services not available over VPN that should be

- Flux and Gitops

- Connecting to AKS Clusters

- Golden Path

GitHub

Adding a new user on GitHub

- See GitHub Onboarding for how to get access

- Make sure the user has accepted the invite sent to the email address set up in GitHub, or accepted it by visiting github.com/hmcts.

Creating a new GitHub repository

See create a GitHub repository

Access to GitHub Repo

- All repositories should be administered by the team who owns it.

- Access should be managed through GitHub teams and not individuals.

- If no one from your team has access, follow asking for help to request support from the org admins in one of the community/support channels.

Receiving an email regarding inactive account

You may have received an email from our scheduled pipeline which deletes guest user accounts if they’re inactive for longer than 31 days.

Account close to being deleted

Users who are within a week of being deleted get notified via email on the lead up to the deletion date, starting 7 days before.

If you have received an email about your account being close to being deleted because of inactivity, that is because you haven’t logged into the CJS Common Platform Azure Tenant for a while and need to do so now to keep your access.

Please don’t ignore this email if you use the CJS Common Platform Tenant or GitHub as you will lose access to both if you don’t log in.

To extend your access, visit the CJS Common Platform Azure portal.

Login link: CJS Common Platform

Note: We’re looking into fixing this soon so that as long as you’re active in either GitHub or the CJS Common Platform Tenant you will not lose access.

Account has been deleted

If your account has been deleted and you need it re-enabled, you need to create a ticket via slack in the #platops-help channel and someone will send you another guest invite.

Once that is done you will then be able to get your GitHub access back by asking one of your team members to re-add you following person onboarding.

Jenkins

Jenkins is unavailable

- Check if there is a planned outage in “#cloud-native-announce”

- Please note Jenkins could be temporarily unavailable while rolling out a change, give it a few minutes before you raise an issue.

Cannot login to Jenkins

- Login to Jenkins is managed by Microsoft Entra ID. Please make sure the user has been added to the right Microsoft Entra ID groups.

You are now logged out of Jenkins/ Infinite loop when trying to login to Jenkins

- Please try clearing browser cookies on login.microsoft.com

Cannot see my new repo in Jenkins org / Dashboard

- See Jenkins Onboarding section to add your app to Jenkins Org / Dashboard

Cannot see my branch/PR or This project is currently disabled in Jenkins

- Any branches which are also filed as PRs are not listed as a branch, they will only be listed in pull requests section.

- Branch/ PR is not listed if its last commit creation date is older than 30 days.
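To check whether the 30-day rule above explains a missing branch, you can compare the age of its last commit against that threshold. This is a minimal sketch rather than the check Jenkins itself performs: branch_is_stale is a hypothetical helper name, and it assumes the committer date (git's %ct format) is the relevant commit creation date.

```shell
# Hypothetical helper: succeeds (exit 0) when a commit is older than 30 days.
# $1 = commit time, $2 = current time, both in seconds since the Unix epoch.
branch_is_stale() {
  commit_epoch=$1
  now_epoch=$2
  limit=$((30 * 86400))  # 30 days expressed in seconds
  [ $((now_epoch - commit_epoch)) -gt "$limit" ]
}

# Usage inside a local checkout of the branch (uncomment to run):
# if branch_is_stale "$(git log -1 --format=%ct)" "$(date +%s)"; then
#   echo "Last commit is older than 30 days"
# fi
```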

Sonar scan cannot find default branch

You may get the error below when the pipeline has not yet run on the master/main branch.

Running it on the master/main branch first will set up the default branch, after which the pull request build will start working.

[2021-09-16T10:59:54.154Z] Execution failed for task ':sonarqube'.

[2021-09-16T10:59:54.154Z] > Could not find a default branch to fall back on.

Sonar scan timeout

- Please see sonarcloud status for any known issues with sonar cloud.

- Remember that Platform Operations does not maintain SonarCloud; issues are usually discussed on community forums.

Sonar scan quality gate failure

If you receive this error: Pipeline aborted due to quality gate failure: NONE on master, try a re-run of the pipeline. This may simply be an intermittent issue caused by sonarcloud or because the GitHub repo has only just been created and this is the first time you’re running the pipeline.

Build / Docker Build / Unit Test failure

- If your build is failing in these stages, it’s most likely to fail locally as well. Look at the first line of the Jenkins step that fails and try running the same command that Jenkins is running.

- Try on a colleague’s machine as you might have cached something locally.

Helm Upgrade Failed, Helm Release timed out waiting for condition, Helm Release Failure

- See Connecting to AKS Clusters and connect to the relevant cluster.

- These errors usually mean your pods didn’t start as expected in time.

- It could be that they are stuck in Pending or ContainerCreating status, or they might be failing to start up, leading to CrashLoopBackOff status.

- Follow Debug Application Startup issues in AKS to troubleshoot further.

Jenkins managed helm releases / pods are automatically deleted

- To maintain the health of the cluster, it is important to cleanup unwanted pods regularly.

- Helm release is cleared for PRs which are merged or closed.

- Helm release of PRs raised by dependency bots (based on dependencies label) is cleared once the functional tests pass.

- Helm release on AAT Staging is also cleared once functional tests pass.

- For optimal usage, you can also configure your pipeline to clear Helm releases on successful build.

- A scheduled pipeline runs every hour to clear any helm releases which have not been updated in the last 3 days. Teams can do more frequent cleanup by overriding in the cleanup script.

Smoke / Functional test failure

- Jenkins only sets secrets as environment variables and runs the gradle/yarn task to run tests.

- Access the URL on VPN and try running tests manually using the TEST_URL printed in the logs.

- You can also run tests locally by setting the required secrets while on the VPN.

- To add additional logging, see Example config.

Terraform/ Build Infrastructure failure

- It is important to review your plan in a pull request before applying it to an environment.

- Please check if it’s an intermittent failure with Azure, as a retry could fix it.

- Also, see if there are any open issues/discussions on community channels for the failure.

- There could be open GitHub issues on terraform/ azurerm, so googling it could help as well.

Using branches to troubleshoot issues

If your pipeline is throwing an error, you may be able to more easily troubleshoot the issue by using a branch in the cnp-jenkins-library repo.

Example 1

Gradle is failing because a plugin cannot be found in artifactory. You’ve checked the plugin exists and there are no typos in your code. You can check if the issue lies with artifactory by temporarily bypassing it using a branch.

And then referencing that branch within your repo’s Jenkinsfile

In this example, the issue is network related. This may be down to routing in Azure or traffic being blocked by a firewall.

Example 2

Gradle is failing a functional test. To help get more information on why, you could update the Gradle logging level in a branch of cnp-jenkins-library

And then reference this branch within your repo’s Jenkinsfile

Error message channel_not_found

When you want to send Jenkins pipeline notifications to a slack channel, you must make sure the Jenkins app has been added to the channel.

If you don’t do this, you will receive the channel_not_found error message and the pipeline will fail.

See the instructions on the slack section of the onboarding guide to find out how to do this.

If you are getting this message on a PR pipeline and the error also says Failed to notify @U1234ABCD, that means your github username has been mapped to an invalid slack id.

Find your slack id by clicking on View profile within the slack app, then click on the three dots and click Copy member ID.

Update your github to slack user mapping in this file and try running the pipeline again.

Sandbox Jenkins is not automatically picking up my changes

Because we have a prod and sandbox Jenkins instance, sometimes your pushes to master may be picked up by prod Jenkins instead.

If this happens, simply run the master build manually on sandbox jenkins.

Debug Application Startup issues in AKS

There are many reasons why an application could fail to start up, for example:

- A secret referenced in the helm chart is missing from the key vaults.

- Pod identity is not able to pull keyvault secrets due to missing permissions.

- There is not enough space in the cluster to fit in a new pod.

- Pod is scheduled, but fails to pass readiness (/health/readiness) or liveness (/health/liveness) checks.

- A misconfigured environment variable, for example an incorrect URL of a dependent service.

Below are some handy kubectl commands to debug the issues.

To check latest events on your namespace:

kubectl get events -n <your-namespace>

To check status of pods:

kubectl get pods -n <your-namespace> | grep <helm-release-name>

# Examples

# kubectl get pods -n ccd | grep ccd-data-store-api

# kubectl get pods -n ccd | grep pr-123

To check status of a specific pod which is not running:

kubectl describe pod <pod-name> -n <your-namespace>

To check logs of a pod which is not starting:

kubectl logs <pod-name> -n <your-namespace>

# To follow logs

kubectl logs <pod-name> -n <your-namespace> -f

# To check previous pod logs if it’s restarting

kubectl logs <pod-name> -n <your-namespace> -p
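When a namespace has many pods, it can help to narrow the kubectl get pods output down to the ones that need attention. The sketch below assumes the default column layout (NAME, READY, STATUS, RESTARTS, AGE). The function name unhealthy_pods is hypothetical and not part of kubectl.

```shell
# Hypothetical filter: reads `kubectl get pods` output on stdin and keeps the
# header plus any pod that is not Completed and is either not Running or has
# fewer ready containers than expected (for example 0/1).
unhealthy_pods() {
  awk '
    NR == 1 { print; next }          # always keep the header row
    $3 == "Completed" { next }       # finished jobs are not a problem
    {
      split($2, ready, "/")          # READY column looks like "1/1"
      if ($3 != "Running" || ready[1] != ready[2]) print
    }
  '
}

# Usage (uncomment and substitute your namespace):
# kubectl get pods -n <your-namespace> | unhealthy_pods
```

The pods it prints are the ones to pass to kubectl describe pod and kubectl logs.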

F5 VPN not connecting or services not available over VPN that should be

Normally connecting to the VPN at portal.platform.hmcts.net should work without issues.

Occasionally there are issues where the VPN will hang on connecting or will connect but certain services will not be available over the VPN.

The most common solution is to disable IPv6 on your device. This is because the F5 VPN has not been configured properly for IPv6 but services behind Azure Front Door are available over IPv6.

The process to disable IPv6 will depend on your operating system, please choose the correct link below based on what your device uses:

If that doesn’t work then make sure you’ve applied the latest operating system updates and tried restarting your device. If you’re still having issues then please raise a ticket in the #platops-help channel.

Flux and Gitops

Always check why your release or pod has failed in the first instance. Although you may have permissions to delete a helm release or pod in a non-production environment, use this privilege wisely as you could be hiding a potential bug which could also occur in production.

Latest image is not updated in cluster

- Start with checking cnp-flux-config to make sure flux has updated/ committed the image.

- If image hasn’t been committed to Github, see Flux did not commit latest image to Github.

If flux has committed the new image to Github, check if the HelmRelease has been updated by Flux. Run the command below and check that the image tag has been updated in the output:

kubectl get hr -n <your-namespace> <your-helm-release-name> -o yaml

If the image is not updated in the output, see Change in git is not applied to cluster.

If the image tag is updated and still application pods are not deployed, see Updated HelmRelease is not deployed to cluster

Flux did not commit latest image to Github

- Image automation is run from management cluster (CFTPTL). Please login to cftptl cluster before further troubleshooting.

- Image reflector controller keeps polling ACR for new images, but it should generally update the new image in 10 minutes.

Check status of imagerepositories and verify the last scan.

kubectl get imagerepositories -n flux-system <repository name(usually helm release name)>

If the last scan doesn’t update, check image reflector controller logs to see if there are any logs related to the helm repo.

kubectl logs -n flux-system -l app=image-reflector-controller --tail=-1

# search for specific image

kubectl logs -n flux-system -l app=image-reflector-controller --tail=-1 | grep <Release Name>

If the last scan is latest, check imagepolicy status to verify that the image returned matches the expectation.

kubectl get imagepolicies -n flux-system <policy name(usually helm release name)>

If it doesn’t match the expected tag, verify image reflector controller logs as described above.

If the imagepolicy object returned shows the expected image, but it didn’t commit to Github, check image automation controller logs.

kubectl logs -n flux-system -l app=image-automation-controller

# search for specific image

kubectl logs -n flux-system -l app=image-automation-controller | grep <Release Name>

Updated HelmRelease is not deployed to cluster

- Helm operator queues all the updates, so it could take up to 20 minutes sometimes to be picked up.

Check HelmRelease status to see the status.

kubectl get hr -n <namespace> <Release Name>

Look at helm operator logs to see if there are any errors specific to your helm release.

kubectl logs -n flux-system -l app=helm-controller --tail=1000 | grep <Release Name>

If you see any errors like status 'pending-install' of release does not allow a safe upgrade, you need to delete the HelmRelease to fix this. Request help from Platform Operations if you do not have permissions.

kubectl delete hr <helm-release-name> -n <namespace>

In most cases, helm release gets timed out with an error in log similar to failed: timed out waiting for the condition. This usually means application pods didn’t start up in time and you need to look at your pods to know more.

Check the latest status on helm release and if it has already been rolled back to previous release.

kubectl describe hr <helm-release-name> -n <your-namespace>

If you are looking at pods after a long time, HelmRelease might have been rolled back and you won’t have failed pods. Easiest way is to add a simple change like a dummy environment variable in flux-config to re-trigger the release and debug the issue when it occurs.

If your old pods are still running when you check, follow Debug Application Startup issues in AKS to troubleshoot further.
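The helm-controller log search described in this section can return a lot of routine lines for a busy release. As a rough sketch, the filter below keeps only lines that mention the release and also look like failures. The function name release_errors and the keyword list (error, failed, timed out) are assumptions chosen for illustration, not an official log format.

```shell
# Hypothetical filter: reads controller logs on stdin and prints only lines
# that mention the given release AND contain a failure-like keyword.
release_errors() {
  release=$1
  grep -F -- "$release" | grep -Ei 'error|failed|timed out'
}

# Usage (uncomment and substitute your release name):
# kubectl logs -n flux-system -l app=helm-controller --tail=1000 | release_errors <Release Name>
```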

Change in git is not applied to cluster

To check if the latest GitHub commit has been downloaded, check the status:

kubectl get gitrepositories flux-config -n flux-system

If the commit doesn’t match the latest id, verify source controller logs to see any related errors.

kubectl logs -n flux-system -l app=source-controller

If commit id is recent, verify status of flux kustomization for your namespace to get the version of git applied.

kubectl get kustomizations.kustomize.toolkit.fluxcd.io -n flux-system <namespace>

If the above status doesn’t show the latest commit or shows any error, see kustomize controller logs to find relevant errors.

kubectl logs -n flux-system -l app=kustomize-controller

# search for specific namespace

kubectl logs -n flux-system -l app=kustomize-controller | grep <namespace>

Connecting to AKS Clusters

- By default, all developers have read access to non-prod AKS clusters and slightly higher privileges to their namespaces.

- You can connect to AKS clusters using az aks get-credentials. Below are some handy commands:

# Sandbox

az aks get-credentials --resource-group ss-sbox-00-rg --name ss-sbox-00-aks --subscription DTS-SHAREDSERVICES-SBOX

az aks get-credentials --resource-group ss-sbox-01-rg --name ss-sbox-01-aks --subscription DTS-SHAREDSERVICES-SBOX

# Dev

az aks get-credentials --resource-group ss-dev-01-rg --name ss-dev-01-aks --subscription DTS-SHAREDSERVICES-DEV

# Staging

az aks get-credentials --resource-group ss-stg-00-rg --name ss-stg-00-aks --subscription DTS-SHAREDSERVICES-STG

az aks get-credentials --resource-group ss-stg-01-rg --name ss-stg-01-aks --subscription DTS-SHAREDSERVICES-STG

# Test

az aks get-credentials --resource-group ss-test-00-rg --name ss-test-00-aks --subscription DTS-SHAREDSERVICES-TEST

az aks get-credentials --resource-group ss-test-01-rg --name ss-test-01-aks --subscription DTS-SHAREDSERVICES-TEST

# ITHC

az aks get-credentials --resource-group ss-ithc-00-rg --name ss-ithc-00-aks --subscription DTS-SHAREDSERVICES-ITHC

az aks get-credentials --resource-group ss-ithc-01-rg --name ss-ithc-01-aks --subscription DTS-SHAREDSERVICES-ITHC

# Demo

az aks get-credentials --resource-group ss-demo-00-rg --name ss-demo-00-aks --subscription DTS-SHAREDSERVICES-DEMO

az aks get-credentials --resource-group ss-demo-01-rg --name ss-demo-01-aks --subscription DTS-SHAREDSERVICES-DEMO

# Prod (Requires additional permissions)

az aks get-credentials --resource-group ss-prod-00-rg --name ss-prod-00-aks --subscription DTS-SHAREDSERVICES-PROD

az aks get-credentials --resource-group ss-prod-01-rg --name ss-prod-01-aks --subscription DTS-SHAREDSERVICES-PROD

# SDSPTL (Prod management)

az aks get-credentials --resource-group ss-ptl-00-rg --name ss-ptl-00-aks --subscription DTS-SHAREDSERVICESPTL
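Most of the commands above follow one naming pattern: ss-<env>-<nn>-rg, ss-<env>-<nn>-aks and DTS-SHAREDSERVICES-<ENV>. The sketch below builds the command from an environment name and cluster number. The function name aks_credentials_cmd is hypothetical. It only prints the command, so you can review it before running it. It does not cover the SDSPTL cluster, whose subscription name (DTS-SHAREDSERVICESPTL) breaks the pattern.

```shell
# Hypothetical helper: prints the az command for a given environment and
# cluster number, following the naming pattern of the commands listed above.
# $1 = environment (sbox, dev, stg, test, ithc, demo, prod), $2 = cluster number (00 or 01).
aks_credentials_cmd() {
  env_name=$1
  cluster_num=$2
  # Subscription names use the upper-cased environment name.
  sub_suffix=$(printf '%s' "$env_name" | tr '[:lower:]' '[:upper:]')
  printf 'az aks get-credentials --resource-group ss-%s-%s-rg --name ss-%s-%s-aks --subscription DTS-SHAREDSERVICES-%s\n' \
    "$env_name" "$cluster_num" "$env_name" "$cluster_num" "$sub_suffix"
}

# Usage: print the command for the second staging cluster.
# aks_credentials_cmd stg 01
```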

Once you have logged in, you can switch between clusters using kubectx or below kubectl commands:

kubectl config use-context cft-perftest-00-aks

kubectl config use-context cft-aat-00-aks

Golden Path

NodeJS Errors

- URL.canParse is not a function

TypeError: URL.canParse is not a function

at parseSpec (/usr/lib/node_modules/corepack/dist/lib/corepack.cjs:23025:21)

at loadSpec (/usr/lib/node_modules/corepack/dist/lib/corepack.cjs:23088:11)

at async Engine.findProjectSpec (/usr/lib/node_modules/corepack/dist/lib/corepack.cjs:23262:22)

at async Engine.executePackageManagerRequest (/usr/lib/node_modules/corepack/dist/lib/corepack.cjs:23314:24)

at async Object.runMain (/usr/lib/node_modules/corepack/dist/lib/corepack.cjs:24007:5)

Node.js v18.16.0

Solution

Bump the node version in .nvmrc to 18.17
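To confirm a version string meets the 18.17 minimum before pushing, a small check like the one below can be run locally. It is a sketch only: node_version_ok is a hypothetical helper, and it assumes .nvmrc holds a plain numeric version such as 18.17 or v18.17.0. Aliases such as lts/hydrogen are treated as not meeting the minimum.

```shell
# Hypothetical helper: succeeds (exit 0) when the version is 18.17 or newer.
# $1 = version string, with or without a leading "v".
node_version_ok() {
  version=${1#v}  # drop an optional leading "v"
  # "+ 0" forces numeric comparison, so non-numeric input counts as 0.
  printf '%s\n' "$version" |
    awk -F. '{ exit !($1 + 0 > 18 || ($1 + 0 == 18 && $2 + 0 >= 17)) }'
}

# Usage in the root of your repo (uncomment to run):
# node_version_ok "$(cat .nvmrc)" || echo ".nvmrc is below 18.17"
```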

- After(build) is deprecated

after(build) is deprecated, consider using 'afterSuccess', 'afterFailure', 'afterAlways' instead This change is enforced from 30/01/2023

Solution

Update references in any Jenkinsfiles in your repo to afterSuccess(build)

- Yarn security vulnerabilities

Error

Security vulnerabilities were found that were not ignored.

Solution

In your local git repo, run yarn install to install the packages contained in your package.json.

Yarn v3 stores the packages within the repo in the .yarn/cache folder.

You can run yarn info to get a flow diagram output showing the packages and the dependencies they contain.

This should help you determine which packages contain vulnerable dependencies.

You can send the output of this command to a file for easier reading in your IDE: yarn info > /tmp/yarn-deps.txt.

To upgrade the dependencies, you can update the version in the package.json file manually.

Search npmjs for the package name to find the latest version.

You can also run yarn upgrade-interactive, select the package that needs updating with the arrow keys on your keyboard, and hit Enter.

This will update the package.json file too.

Because the packages are stored within the repo, you need to run yarn install again before committing the changes to GitHub.

If you don’t run yarn install after updating the package.json file, you will receive an error in the pipeline about yarn install changing the lockfile, which is forbidden.

If a new version of the affected package has not yet been released, you can temporarily ignore the issue by running:

yarn npm audit --recursive --environment production --json > yarn-audit-known-issues

This is a temporary measure and all packages must be updated when new versions are released to ensure security vulnerabilities are mitigated.

The Renovate tool should raise pull requests automatically when a new package version is released. You can simply approve this change and merge the PR to mitigate the vulnerabilities.

- Yarn test failures

Error

Page / › should have no accessibility errors.

Solution

This error means the accessibility test for the root page (/) is failing. This often happens if the govuk-frontend package is outdated or if its template files aren’t correctly set up in your project. To fix it, update govuk-frontend to the latest version and ensure the GOV.UK template is in your views directory.

1) Update govuk-frontend to the latest Version

yarn add govuk-frontend@latest

2) Move the GOV.UK Template to the Views Directory

mkdir -p src/main/views/govuk

mv node_modules/govuk-frontend/dist/govuk src/main/views/

3) Run the a11y test to check if the issue is resolved:

yarn test:a11y

- Linting and Prettier Issues

Error: Code Style Violations or Formatting Issues

ESLint: Unexpected token (error)

Prettier: Code style issues found in the following file(s)

Solution

1) Run ESLint to Fix Linting Errors

yarn lint --fix

This will attempt to resolve common issues like incorrect syntax, unused variables, or improper indentation based on your ESLint configuration.

2) Use Prettier to reformat all files in the src/ directory to match the project’s style guide:

yarn prettier --write src/

3) Verify fixes

yarn lint

Helm chart is deprecated

Error

Version of nodejs helm chart below 3.1.0 is deprecated, please upgrade to latest release https://github.com/hmcts/chart-nodejs/releases This change is enforced from 30/06/2024

In your git repo, open charts/labs-YourGithubUsername-nodejs/Chart.yaml and update the nodejs dependency to the minimum version from the error message:

apiVersion: v2

appVersion: '1.0'

description: A Helm chart for labs-YourGithubUsername-nodejs App

name: labs-YourGithubUsername-nodejs

home: https://github.com/hmcts/labs-YourGithubUsername-nodejs

version: 0.0.7

dependencies:

  - name: nodejs

    version: 3.1.1

    repository: 'https://hmctspublic.azurecr.io/helm/v1/repo/'

Remember to increment the version of your chart as well e.g. from 0.0.7 to 0.0.8.
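Incrementing the chart version is a patch bump on a three-part version number. The following sketch shows the arithmetic. The function name bump_patch is hypothetical and it assumes the version has exactly the form MAJOR.MINOR.PATCH.

```shell
# Hypothetical helper: prints the next patch version for MAJOR.MINOR.PATCH input.
bump_patch() {
  printf '%s\n' "$1" | awk -F. '{ printf "%d.%d.%d\n", $1, $2, $3 + 1 }'
}

# Usage: prints the value to put in the version field of Chart.yaml.
# bump_patch 0.0.7
```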

Non-whitelisted pattern found in HelmRelease

Error

!! Non whitelisted pattern found in HelmRelease: apps/labs/labs-YourGithubUsername-nodejs/labs-YourGithubUsername-nodejs.yaml it should be prod-[a-f0-9]+-(?P<ts>[0-9]+)

Solution

In the flux config repo, after running the create-lab-flux-config.sh script, you should have the following files under apps/labs/labs-YourGithubusername-nodejs:

- labs-YourGithubUsername-nodejs.yaml

- image-policy.yaml

- image-repo.yaml

In the labs-YourGithubusername-nodejs.yaml file, you will see a value for image under values/nodejs.

This will be pointing to the docker image stored in Azure Container Registry (ACR).

If all the previous steps of the tutorial worked as expected, the tag on this image should be something like prod-[a-f0-9]+-(?P<ts>[0-9]+).

If the tag does not match this pattern, you will receive the above error when you submit your PR to the flux config repo.

Check the ACR via the Azure Portal or via az acr commands in your terminal to see if an image with the right tag exists:

az acr manifest list-metadata hmctssandbox.azurecr.io/labs/YourGithubusername-nodejs

If a tag with the right pattern does not exist, make sure your Jenkins pipeline has passed as it should create an image with the right tag.

You can enter the tag manually in the labs-YourGithubusername-nodejs.yaml file and push it to your branch.

As long as the pattern matches, the tests should pass and you can merge your PR after approval.
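Before pushing, you can check a tag against the expected pattern locally. This is a minimal sketch, not the validation the flux config repo itself runs: is_prod_tag is a hypothetical helper, the named group (?P<ts>...) is replaced by a plain [0-9]+ because grep -E does not support named groups, and anchoring the pattern to the whole tag is an assumption.

```shell
# Hypothetical helper: succeeds (exit 0) when the tag looks like
# prod-<hex characters>-<digits>, mirroring the pattern in the error message.
is_prod_tag() {
  printf '%s\n' "$1" | grep -Eq '^prod-[a-f0-9]+-[0-9]+$'
}

# Usage (the tag value here is made up for illustration):
# is_prod_tag "prod-1a2b3c4-20240101120000" && echo "tag matches"
```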

Azure Front Door - Our Services are Unavailable - 502 Error

Error

On browsing to your application you receive:

Our services aren't available right now. We're working to restore all services as soon as possible. Please check back soon. 0tEdHXAAAAAADUxvBayGtQLDTjRthnz9XTE9OMjFFREdFMDMyMQBFZGdl

Solution

This is likely the result of missing or incorrect DNAT rules on Azure Firewall. Review the tutorial guide to make sure you’ve submitted and merged a PR to add this in code.

Check the pipeline passed after merging. If the pipeline failed, it’s possible the rules were not created.

Check the IP your rule is forwarding to. It should be the private IP of the frontend Application Gateway.

You can find this here.

Terraform state lock

Error

Sometimes when Terraform apply runs in one of the Azure DevOps pipelines, it may fail with the following (or similar) error:

│ Error message: state blob is already locked

│ Lock Info:

│ ID: <lock-id>

│ Path: subscription-tfstate/UK South/hub/hub-terraform-infra/sbox/hub_infra/terraform.tfstate

│ Operation: OperationTypeApply

│ Who: vsts@fv-az635-78

│ Version: 1.10.1

│ Created: 2025-01-07 16:02:25.188636401 +0000 UTC

│ Info:

This issue usually occurs when the pipeline has failed or has been cancelled after the plan phase and before the lock release phase has been run. To fix this the lock has to be released manually.

Solution

1) Identify the storage account used by the Terraform pipeline. This is usually shown in the Terraform Init phase like so:

/home/vsts/.local/bin/terraform init -backend-config=storage_account_name=<account-name>

2) Identify the .tfstate file path. This is usually given in the Terraform error message in the Apply phase like so:

│ Error message: state blob is already locked

│ Lock Info:

│ ID: <lock-id>

│ Path: subscription-tfstate/UK South/hub/hub-terraform-infra/sbox/hub_infra/terraform.tfstate

3) In Azure Portal, find the Storage Account by searching all resources for the name identified in Step 1.

4) Follow the path identified in Step 2 until you find the terraform.tfstate file. It will have an active lease; right click and select “Break Lease”, which will release the lock.

5) Provided you have released the lock on the correct Terraform state file, the next pipeline run should now pass.
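If you prefer the command line to the portal, the lease can also be broken with az storage blob lease break. The sketch below only prints the command so that it can be reviewed first. The function name break_lease_cmd is hypothetical, and it assumes the first segment of the Path in the error message is the container name and the remainder is the blob name. Depending on how you authenticate to the storage account, you may need to add further authentication options.

```shell
# Hypothetical helper: prints an az command that would break the lease on a
# state blob. $1 = storage account name, $2 = Path value from the lock info.
break_lease_cmd() {
  account=$1
  path=$2
  container=${path%%/*}  # text before the first "/" (assumed container name)
  blob=${path#*/}        # everything after the first "/" (assumed blob name)
  printf 'az storage blob lease break --account-name "%s" --container-name "%s" --blob-name "%s"\n' \
    "$account" "$container" "$blob"
}

# Usage (uncomment and substitute the account name from the Init phase):
# break_lease_cmd <account-name> "subscription-tfstate/UK South/hub/hub-terraform-infra/sbox/hub_infra/terraform.tfstate"
```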

Java (Spring Boot) Golden Path Errors

- Dependencies security vulnerabilities can be resolved by updating the version

We recommend using the OWASP Dependency Checker. The checker monitors the project’s dependencies and creates a report of known vulnerable components that are included in the build.

Solution

To resolve security vulnerabilities flagged by the OWASP Dependency Check plugin that can be resolved by upgrading dependencies, you can follow this chain of commands. The goal here is to update the flagged dependencies to their latest safe versions:

Step 1: Run Dependency Check to Identify Vulnerabilities

./gradlew dependencyCheckAnalyze --info

If your project already has the Jenkins CI pipeline set up, you can run the dependency check by triggering the pipeline.

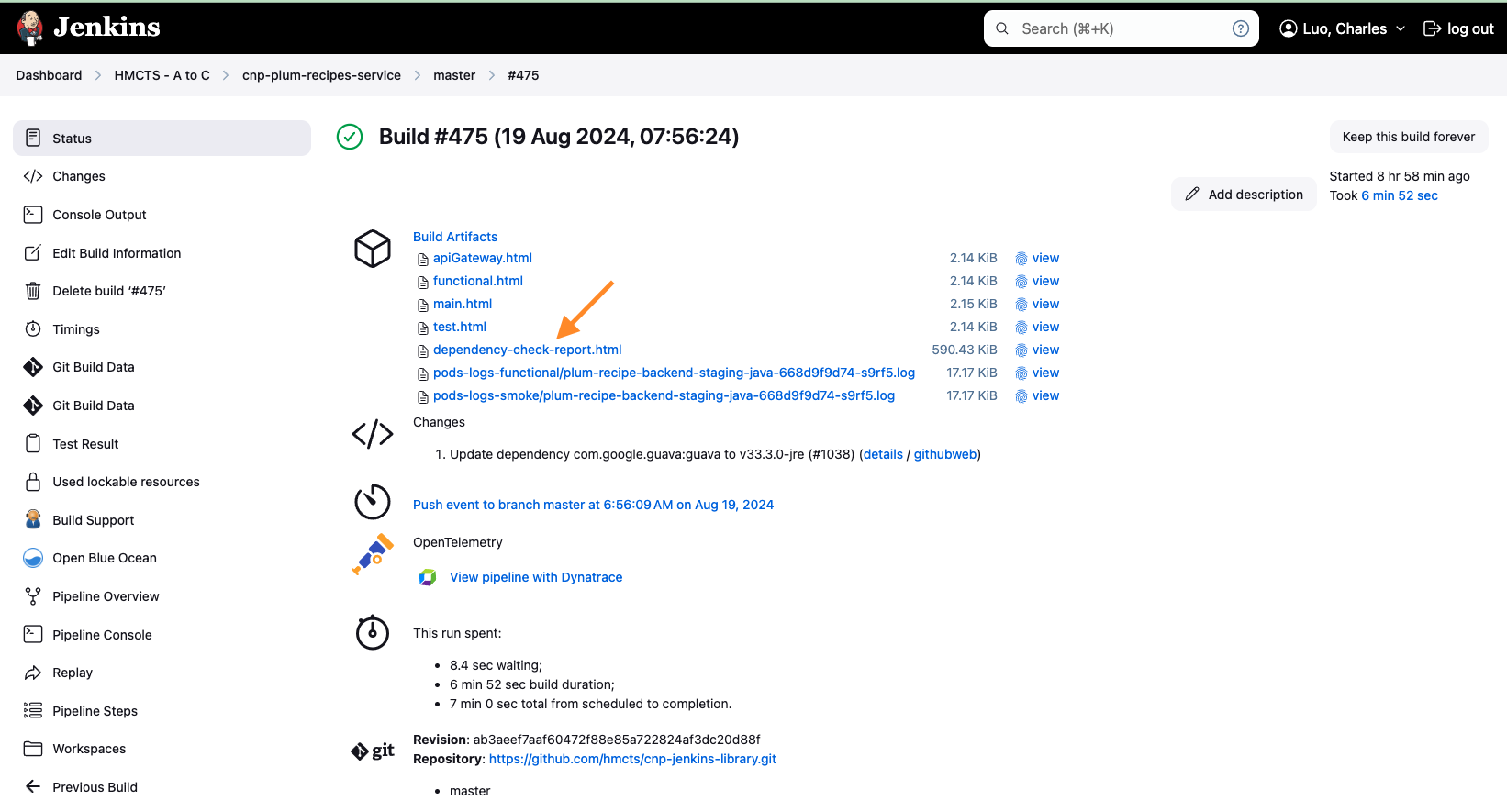

Step 2: Review the Report

After running the dependency check, open the generated report (e.g., build/reports/dependency-check-report.html) to identify the vulnerable dependencies and note their current versions. To access the generated report in Jenkins, you can find it in the Build Artifacts.

Step 3: Upgrade Vulnerable Dependencies

Once you’ve identified the dependencies that need upgrading, use the following steps:

- Check for Latest Versions:

Use the Gradle command to check for the latest versions of the dependencies that have vulnerabilities:

./gradlew dependencyUpdates -Drevision=release

Alternatively you can go to the Maven Repository and search for the dependency to find the latest version.

- Update Dependencies in build.gradle:

Manually update the version numbers of the flagged dependencies in your build.gradle file to the latest versions identified.

For example, if a vulnerable dependency was:


implementation 'com.example:some-dependency:1.0.0'

And the latest safe version is 1.2.0, update it to:

implementation 'com.example:some-dependency:1.2.0'

Repeat this for all vulnerable dependencies flagged by the report.

- Re-sync Gradle Dependencies:

After updating the build.gradle file, re-sync the Gradle project to apply the changes:

./gradlew build --refresh-dependencies

Step 4: Re-run the Dependency Check

After upgrading the dependencies, run the dependency check again to ensure that the vulnerabilities have been resolved:

./gradlew dependencyCheckAnalyze --info

- Suppress false positives in the OWASP Dependency Checker

Because of the way the dependency checker works, false positives and false negatives may exist. We can suppress these false positives by providing the dependency checker with the path to a suppression file in the build.gradle file.

dependencyCheck {

suppressionFile = 'path/to/suppression.xml'

}

Here is an example of how to configure the suppression file: build.gradle

Here is the aforementioned suppression file

- “NoSuchMethodError” when running the OWASP Dependency Checker

With Dependency-Check v9.0.0, users may encounter NoSuchMethodError exceptions due to dependency resolution.

Solution

You will need to pin some of the transitive dependencies to the versions that are compatible with the Dependency-Check. e.g.

dependencies {

    constraints {

        // org.owasp.dependencycheck needs at least this version of jackson. Other plugins pull in older versions.

        add("implementation", "com.fasterxml.jackson:jackson-bom:2.16.1")

        // org.owasp.dependencycheck needs these versions. Other plugins pull in older versions.

        add("implementation", "org.apache.commons:commons-lang3:3.14.0")

        add("implementation", "org.apache.commons:commons-text:1.11.0")

    }

}